Creating a Living Data Dictionary in Salesforce

A data dictionary is a document that describes a system along with its fields and their attributes. Often this is an Excel spreadsheet or a Word document. A Salesforce data dictionary is very important, yet it can be taxing to keep up to date as the org changes. Instead of relying on a static artifact (such as a spreadsheet or document) that must be updated by hand, which invites errors and resists change, you can create a system that updates itself.

With some Apex, you can create a living data dictionary within Salesforce that can:

- View all objects in the org

- View all fields associated to an object

- View record types associated to an object

- Identify the picklist values specific to each record type

- Create a more collaborative approach to system definitions

- Always be up to date with the latest changes

- Be implemented in your CI/CD pipeline

- Control who can update or view the dictionary

- Allow Chatter posts on objects, fields, picklist values, etc.

This article covers this automation by creating several objects, fields, Apex classes, and a Lightning web component. The result is a self-updating data dictionary that you can extend to fit your organization’s needs. It can also be implemented in a dedicated sandbox for the data dictionary, which would provide the most flexibility to use it in a CI/CD pipeline, control permissions, and build any other helpful tools.

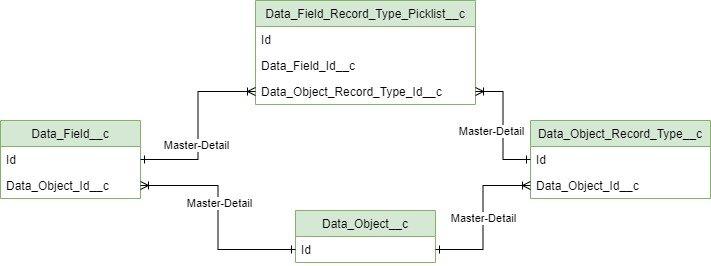

Let’s start with the data model. This data dictionary has four objects: Data_Object__c, Data_Field__c, Data_Object_Record_Type__c, and Data_Field_Record_Type_Picklist__c.

Data Dictionary Data Model

After reviewing the data model let’s get to work.

Data Object and Batch

Create a custom object with Label: Data Object and API Name: Data_Object__c. This object holds one record for each object in the Salesforce schema, along with that object’s metadata.

After creating Data_Object__c, create the following fields:

| Label | Data Type |
| --- | --- |
| Custom Definition | RichTextArea(32768) |
| Custom Setting | Checkbox |
| Custom | Checkbox |
| Feed Enabled | Checkbox |
| Has Subtypes | Checkbox |
| Label Plural | TextArea(255) |
| Label | TextArea(255) |
| Mergeable | Checkbox |
| Prefix | TextArea(255) |
| Record Type Dev Names | RichTextArea(32768) |
| Record Type Names | RichTextArea(32768) |

Data Dictionary Object Batch Class

With the object and all of its fields created, we can move on to the batch. This batch goes through all of the objects in the org, creates a record for each, and adds the associated attributes to the record. Create the Apex batch:

    public class DataDictionaryObjects_Batch implements Database.Batchable<SObjectType>, Database.Stateful {

        // Stateful setup: the current Data Object records, used to detect changes and deletions
        private List<Data_Object__c> currentDataObjectList = new List<Data_Object__c>([
            SELECT Custom__c, Custom_Setting__c, Feed_Enabled__c,
                   Has_Subtypes__c, Label_Plural__c, Mergeable__c,
                   Name, Record_Type_Dev_Names__c, Record_Type_Names__c
            FROM Data_Object__c
        ]);
        private List<String> currentObjectNamesList = new List<String>(); // used to compare for changes
        private Map<String, Data_Object__c> currentObjMap = new Map<String, Data_Object__c>();

        public Iterable<SObjectType> start(Database.BatchableContext bc) {
            // Put all current object records into the map and list
            for (Data_Object__c dataObject : currentDataObjectList) {
                currentObjectNamesList.add(dataObject.Name);
                currentObjMap.put(dataObject.Name, dataObject);
            }
            return Schema.getGlobalDescribe().values(); // tokens for every object in the org
        }

        public void execute(Database.BatchableContext bc, List<SObjectType> scope) {
            List<Data_Object__c> newDataObjectList = new List<Data_Object__c>();
            List<Data_Object__c> updatedDataObjectList = new List<Data_Object__c>();

            // Loop through all the SObjectTypes in this chunk
            for (Schema.SObjectType typ : scope) {
                String sobjName = String.valueOf(typ);
                Schema.DescribeSObjectResult objectResult = typ.getDescribe(); // get describe of object

                // Copy the describe attributes into local variables
                Boolean custom = objectResult.isCustom();
                Boolean customSetting = objectResult.isCustomSetting();
                Boolean feedEnabled = objectResult.isFeedEnabled();
                Boolean hasSubTypes = objectResult.getHasSubTypes();
                String labelPlural = objectResult.getLabelPlural();
                Boolean mergeable = objectResult.isMergeable();
                String prefix = objectResult.getKeyPrefix();
                String label = objectResult.getLabel();
                String name = objectResult.getName();

                // Loop the record type info and collect each record type name and developer name
                List<String> recordTypeNameList = new List<String>();
                List<String> recordTypeDevNameList = new List<String>();
                for (Schema.RecordTypeInfo objectRecordTypeInfo : objectResult.getRecordTypeInfos()) {
                    recordTypeNameList.add(objectRecordTypeInfo.getName());
                    recordTypeDevNameList.add(objectRecordTypeInfo.getDeveloperName());
                }

                // Join the record type lists into strings
                String recordtypename = String.join(recordTypeNameList, ', ');
                String recordtypedevname = String.join(recordTypeDevNameList, ', ');

                // Get the existing record, if any, and check for updates
                if (currentObjectNamesList.contains(sobjName)) {
                    Data_Object__c checkObjectUpdate = currentObjMap.get(sobjName);
                    // Remove the record from the map; anything remaining at the end has been deleted from the org
                    currentObjMap.remove(sobjName);

                    // Check if there are any changes/updates
                    if (checkObjectUpdate.Custom__c != custom
                        || checkObjectUpdate.Custom_Setting__c != customSetting
                        || checkObjectUpdate.Feed_Enabled__c != feedEnabled
                        || checkObjectUpdate.Has_Subtypes__c != hasSubTypes
                        || checkObjectUpdate.Label_Plural__c != labelPlural
                        || checkObjectUpdate.Mergeable__c != mergeable
                        || checkObjectUpdate.Record_Type_Dev_Names__c != recordtypedevname
                        || checkObjectUpdate.Record_Type_Names__c != recordtypename) {
                        checkObjectUpdate.Custom__c = custom;
                        checkObjectUpdate.Custom_Setting__c = customSetting;
                        checkObjectUpdate.Feed_Enabled__c = feedEnabled;
                        checkObjectUpdate.Has_Subtypes__c = hasSubTypes;
                        checkObjectUpdate.Label_Plural__c = labelPlural;
                        checkObjectUpdate.Mergeable__c = mergeable;
                        checkObjectUpdate.Record_Type_Dev_Names__c = recordtypedevname;
                        checkObjectUpdate.Record_Type_Names__c = recordtypename;
                        updatedDataObjectList.add(checkObjectUpdate);
                    }
                } else {
                    // Create a new Data Object record
                    Data_Object__c newObject = new Data_Object__c(
                        Custom__c = custom,
                        Custom_Setting__c = customSetting,
                        Feed_Enabled__c = feedEnabled,
                        Has_Subtypes__c = hasSubTypes,
                        Label_Plural__c = labelPlural,
                        Mergeable__c = mergeable,
                        Name = name,
                        Prefix__c = prefix,
                        Record_Type_Names__c = recordtypename,
                        Record_Type_Dev_Names__c = recordtypedevname,
                        Label__c = label
                    );
                    newDataObjectList.add(newObject);
                }
            }

            insert newDataObjectList;
            update updatedDataObjectList;
        }

        public void finish(Database.BatchableContext bc) {
            // Records still in the map belong to objects that no longer exist in the org
            delete currentObjMap.values();
            DataDictionaryFields_Batch DDFB = new DataDictionaryFields_Batch(); // batch for creating all field records
            Id batchId = Database.executeBatch(DDFB);
        }
    }

After the Data Dictionary Objects batch is created, we will move on to creating the Data Fields and Data Record Type objects and classes.
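Once every class in the chain has been deployed (the object batch starts the fields batch from its finish method), the refresh can be started from Anonymous Apex or put on a schedule. The following is a minimal sketch rather than part of the original build: the wrapper class name DataDictionarySchedule, the job name, the scope size of 50, and the 2 AM cron expression are all assumptions to adjust for your org.

```apex
// Hypothetical wrapper (not from the original article) that starts the batch chain on a schedule.
public class DataDictionarySchedule implements Schedulable {
    public void execute(SchedulableContext sc) {
        // A scope size of 50 is an assumption; tune it to your org's CPU and describe usage.
        Database.executeBatch(new DataDictionaryObjects_Batch(), 50);
    }
}

// From Anonymous Apex:
//   Database.executeBatch(new DataDictionaryObjects_Batch(), 50);                            // one-off run
//   System.schedule('Data Dictionary Refresh', '0 0 2 * * ?', new DataDictionarySchedule()); // daily at 2 AM
```

Scheduling the first batch is enough, because each job in the chain starts the next one when it finishes.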

Data Fields Object and Batch

Create a custom object with Label: Data Field and API Name: Data_Field__c. This object holds the metadata for every field on each object in the org.

After creating Data_Field__c, create the following fields:

| Label | Data Type |
| --- | --- |
| Data_Object__c | Master-Detail(Data Object) |
| AI_Prediction_Field__c | Checkbox |
| Auto_Number__c | Checkbox |
| Business_Owner_Id__c | Text Area(255) |
| Business_Rules__c | Long Text Area(32768) |
| Business_Status__c | Text Area(255) |
| Byte_Length__c | Number(18) |
| Calculated_Formula__c | Long Text Area(32768) |
| Calculated__c | Checkbox |
| Cascade_Delete__c | Checkbox |
| Controller__c | Text Area(255) |
| Controlling_Field_Definition_Id__c | Text Area(255) |
| Custom__c | Checkbox |
| Data_Type__c | Long Text Area(131072) |
| Default_Value_Formula__c | Long Text Area(32768) |
| Default_Value__c | Text Area(255) |
| Defaulted_On_Create__c | Checkbox |
| Dependent_Picklist__c | Checkbox |
| Deprecated_and_Hidden__c | Checkbox |
| Description__c | Long Text Area(32768) |
| Digits__c | Number(18) |
| Durable_Id__c | Text Area(255) |
| Entity_Definition_Id__c | Text Area(255) |
| External_Id__c | Checkbox |
| Extra_Type_Info__c | Text Area(255) |
| Filterable__c | Checkbox |
| Formula_Treat_Null_Number_As_Zero__c | Checkbox |
| Groupable__c | Checkbox |
| HTML_Formatted__c | Checkbox |
| Id_Lookup__c | Checkbox |
| Inline_Help_Text__c | Long Text Area(32768) |
| Is_Api_Sortable__c | Checkbox |
| Is_Compact_Layoutable__c | Checkbox |
| Is_Compound__c | Checkbox |
| Is_Field_History_Tracked__c | Checkbox |
| Is_High_Scale_Number__c | Checkbox |
| Is_Indexed__c | Checkbox |
| Is_List_Filterable__c | Checkbox |
| Is_List_Sortable__c | Checkbox |
| Is_List_Visible__c | Checkbox |
| Is_Name_Field__c | Checkbox |
| Is_Polymorphic_Foreign_Key__c | Checkbox |
| Is_Workflow_Filterable__c | Checkbox |
| Label__c | Text Area(255) |
| Last_Modified_By_Id__c | Text Area(255) |
| Last_Modified_Date__c | Text Area(255) |
| Length__c | Number(18) |
| Local_Name__c | Text Area(255) |
| Name_Field__c | Checkbox |
| Name_Pointing__c | Checkbox |
| Name__c | Text Area(255) |
| Namespace_Prefix__c | Text Area(255) |
| Nillable__c | Checkbox |
| Notes__c | Long Text Area(32768) |
| Object_Manager__c | Formula (Text) |
| Permissionable__c | Checkbox |
| Picklist_Values__c | Long Text Area(131072) |
| Precision__c | Text Area(255) |
| Reference_Target_Field__c | Text Area(255) |
| Reference_To__c | Long Text Area(131072) |
| Relationship_Name__c | Text Area(255) |
| Relationship_Order__c | Number(18) |
| Restricted_Delete__c | Checkbox |
| Restricted_Picklist__c | Checkbox |
| SObject_Field__c | Text Area(255) |
| Scale__c | Number(18) |
| Search_Prefilterable__c | Checkbox |
| Security_Classification__c | Text Area(255) |
| Soap_Type__c | Text Area(255) |
| Sortable__c | Text Area(255) |
| Type__c | Text Area(255) |
| Unique__c | Checkbox |
| User_Story__c | Text Area(255) |
| Write_Requires_Master_Read__c | Checkbox |

Data Dictionary Fields Batch

Now that the fields are created, we need to create the data fields batch class. This class goes through every object schema and checks whether a field record already exists; if so, it checks whether any of the tracked fields have changed. Fields that have been deleted from the org are flagged through Deleted__c rather than removed, which keeps a history record. Note that the batch reads and writes a Deleted__c checkbox on Data_Field__c that does not appear in the field list above, so create it as well. Fields on Data_Field__c that are not part of the tracked fields metadata are additional attributes that you maintain yourself because they are not part of the schema. User_Story__c in the list above is one example.
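The matching in this batch relies on a composite key made of the field API name and the object API name, because field names are not unique across the org. As an illustration only, the decision between inserting a record and flagging one as deleted can be pictured with two sets of keys. The sample keys below are made up, and the real batch works with maps of records rather than plain sets.

```apex
// Keys have the form '<field API name>|<object API name>'.
Set<String> storedKeys = new Set<String>{ 'Name|Account', 'Old_Field__c|Account' }; // existing Data_Field__c records
Set<String> schemaKeys = new Set<String>{ 'Name|Account', 'New_Field__c|Account' }; // fields found in the describe

// In the schema but not stored yet: these need new Data_Field__c records.
Set<String> toInsert = schemaKeys.clone();
toInsert.removeAll(storedKeys);

// Stored but no longer in the schema: these get flagged as deleted.
Set<String> toFlagDeleted = storedKeys.clone();
toFlagDeleted.removeAll(schemaKeys);

System.debug(toInsert);      // contains New_Field__c|Account
System.debug(toFlagDeleted); // contains Old_Field__c|Account
```

Keys present in both sets are the records that get compared against the tracked fields to decide whether an update is needed.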

    public class DataDictionaryFields_Batch implements Database.Batchable<SObjectType> {

        public Iterable<SObjectType> start(Database.BatchableContext bc) {
            List<SObjectType> scopeList = new List<SObjectType>();
            // Loop through all objects in the org aside from the ignored types.
            // While testing, you can limit the scope to a single object instead,
            // e.g. scopeList.add(Schema.Account.sObjectType);
            for (SObjectType scopeObject : Schema.getGlobalDescribe().values()) {
                String scopeText = String.valueOf(scopeObject);
                // ChangeEvents mirror their object, and Share/History objects have a common schema
                Boolean ignore = scopeText.endsWith('History') || scopeText.endsWith('ChangeEvent') || scopeText.endsWith('Share');
                if (!ignore) {
                    scopeList.add(scopeObject);
                }
            }
            return scopeList;
        }

        public void execute(Database.BatchableContext bc, List<SObjectType> scope) {
            // Collect the API names of the objects in scope
            List<String> scopeObjectsList = new List<String>();
            for (Schema.SObjectType objType : scope) {
                scopeObjectsList.add(String.valueOf(objType));
            }

            // Get the current list of Data Fields
            List<Data_Field__c> dataFieldList = [
                SELECT Data_Object__r.Name, Data_Object__r.Id, Name, Durable_Id__c, Name__c, Entity_Definition_Id__c, Namespace_Prefix__c, Local_Name__c,
                       Length__c, Data_Type__c, Extra_Type_Info__c, Calculated__c, Is_High_Scale_Number__c, HTML_Formatted__c, Is_Name_Field__c, Nillable__c,
                       Is_Workflow_Filterable__c, Is_Compact_Layoutable__c, Precision__c, Scale__c, Is_Field_History_Tracked__c, Is_Indexed__c, Filterable__c,
                       Is_Api_Sortable__c, Is_List_Filterable__c, Is_List_Sortable__c, Groupable__c, Is_List_Visible__c, Controlling_Field_Definition_Id__c,
                       Last_Modified_Date__c, Last_Modified_By_Id__c, Relationship_Name__c, Is_Compound__c, Search_Prefilterable__c, Is_Polymorphic_Foreign_Key__c,
                       Business_Owner_Id__c, Business_Status__c, Security_Classification__c, Description__c, AI_Prediction_Field__c,
                       Calculated_Formula__c, Default_Value__c, Deprecated_and_Hidden__c, Formula_Treat_Null_Number_As_Zero__c, Inline_Help_Text__c, Permissionable__c,
                       Reference_To__c, Restricted_Picklist__c, SObject_Field__c, Auto_Number__c, Cascade_Delete__c, Default_Value_Formula__c, Digits__c, Label__c,
                       Name_Field__c, Picklist_Values__c, Type__c, Byte_Length__c, Controller__c, Defaulted_On_Create__c, External_Id__c, Name_Pointing__c, Unique__c,
                       Relationship_Order__c, Reference_Target_Field__c, Restricted_Delete__c, Soap_Type__c, Write_Requires_Master_Read__c, Id_Lookup__c, Custom__c,
                       Dependent_Picklist__c, IsDeleted, CreatedDate, CreatedById, LastModifiedDate, LastModifiedById, SystemModstamp
                FROM Data_Field__c
                WHERE Data_Object__r.Name IN :scopeObjectsList AND Deleted__c = false
            ];

            // Key each existing record; whatever is left in this map at the end no longer exists in the org
            Map<String, Data_Field__c> dataFieldsMap = new Map<String, Data_Field__c>();
            for (Data_Field__c dataField : dataFieldList) {
                // Field names are not org unique; combining them with the object name makes them unique
                String dataFieldKey = dataField.Name + '|' + dataField.Data_Object__r.Name;
                dataFieldsMap.put(dataFieldKey, dataField);
            }

            // Get the field definitions
            List<FieldDefinition> fieldDefinitionList = new List<FieldDefinition>([
                SELECT Id, EntityDefinition.QualifiedApiName, DurableId, QualifiedApiName, EntityDefinitionId,
                       NamespacePrefix, Label, Length, DataType,
                       ExtraTypeInfo, IsCalculated, IsHighScaleNumber, IsHtmlFormatted, IsNameField, IsNillable, IsWorkflowFilterable,
                       IsCompactLayoutable, Precision, Scale, IsFieldHistoryTracked, IsIndexed, IsApiFilterable, IsApiSortable,
                       IsListFilterable, IsListSortable, IsApiGroupable, IsListVisible, ControllingFieldDefinitionId, LastModifiedDate,
                       LastModifiedById, RelationshipName, IsCompound, IsSearchPrefilterable, IsPolymorphicForeignKey, BusinessOwnerId,
                       BusinessStatus, SecurityClassification, Description
                FROM FieldDefinition
                WHERE EntityDefinition.QualifiedApiName IN :scopeObjectsList
            ]);

            // Key the field definitions the same way, so they can be matched to the describes
            Map<String, FieldDefinition> fieldDefinitionMap = new Map<String, FieldDefinition>();
            for (FieldDefinition fieldDef : fieldDefinitionList) {
                String fieldDefinitionKey = fieldDef.QualifiedApiName + '|' + fieldDef.EntityDefinition.QualifiedApiName;
                fieldDefinitionMap.put(fieldDefinitionKey, fieldDef);
            }

            // Map object names to Data Object Ids so new field records can point to their parent
            Map<String, Id> grabObjectMap = new Map<String, Id>();
            List<Data_Object__c> grabObjectIdList = [SELECT Id, Name FROM Data_Object__c WHERE Name IN :scopeObjectsList];
            for (Data_Object__c objectId : grabObjectIdList) {
                grabObjectMap.put(objectId.Name, objectId.Id);
            }

            // Tracked fields come from custom metadata; if any of them change, the record is updated
            List<Data_Dictionary_Tracked_Field__mdt> trackedFieldsList = new List<Data_Dictionary_Tracked_Field__mdt>([SELECT Label FROM Data_Dictionary_Tracked_Field__mdt]);
            List<String> trackedFieldsStringList = new List<String>();
            for (Data_Dictionary_Tracked_Field__mdt trackedField : trackedFieldsList) {
                trackedFieldsStringList.add(trackedField.Label); // the label holds the field API name
            }

            List<Data_Field__c> addFieldList = new List<Data_Field__c>();
            List<Data_Field__c> updateFieldList = new List<Data_Field__c>();
            String createKey;
            String fieldName;

            // Loop through all of the objects in the scope
            for (Schema.SObjectType typ : scope) {
                String sobjName = String.valueOf(typ);
                Map<String, Schema.SObjectField> fieldMap = typ.getDescribe().fields.getMap(); // get the object field map

                // Loop through all of the field values
                for (Schema.SObjectField field : fieldMap.values()) {
                    Schema.DescribeFieldResult describefield = field.getDescribe(); // get field describe
                    fieldName = describefield.getName(); // get field name
                    createKey = fieldName + '|' + sobjName; // create key to get the current data field
                    Data_Field__c currentFieldValues = dataFieldsMap.get(createKey);
                    Boolean dataFieldExists = dataFieldsMap.containsKey(createKey);

                    if (dataFieldExists) {
                        // Utility to help create the data field
                        Data_Field__c potentialNewDataField = DataDictionaryUtility.createNewDataField(fieldDefinitionMap.get(createKey), describefield, currentFieldValues.Id, currentFieldValues.Data_Object__r.Id);
                        Boolean matching = DataDictionaryUtility.dataDictionaryFieldsMatching(currentFieldValues, potentialNewDataField, trackedFieldsStringList);
                        if (!matching) { // a tracked field changed
                            updateFieldList.add(potentialNewDataField);
                        }
                    } else {
                        Data_Field__c newDataField = DataDictionaryUtility.createNewDataField(fieldDefinitionMap.get(createKey), describefield);
                        newDataField.Data_Object__c = grabObjectMap.get(sobjName);
                        addFieldList.add(newDataField);
                    }
                    // Remove from the map so only fields missing from the schema remain
                    dataFieldsMap.remove(createKey);
                }
            }

            insert addFieldList;
            update updateFieldList;

            // To hard-delete the remaining data fields instead, use: delete dataFieldsMap.values();
            // Here the remaining fields are flagged as deleted to keep a history record
            for (Data_Field__c df : dataFieldsMap.values()) {
                df.Deleted__c = true;
            }
            update dataFieldsMap.values();
        }

        public void finish(Database.BatchableContext bc) {
            System.enqueueJob(new DataDictionaryRecordType_Queueable());
        }
    }

Once the fields batch is created, we need to create the Data Object Record Type object and its fields.

Data Object Record Type Object and Queueable

Create the Data_Object_Record_Type__c object and add the following fields:

| Label | Data Type |
| --- | --- |
| Business_Process_Id__c | Text (18) |
| Created_By_Id__c | Text (18) |
| Created_By_Name__c | TextArea |
| Created_Date__c | DateTime |
| Data_Object__c | MasterDetail (Data_Object__c) |
| Description__c | Long Text Area (3000) |
| Developer_Name__c | Text (80) |
| Is_Active__c | Checkbox |
| Is_Person_Type__c | Checkbox |
| Last_Modified_By_Id__c | Text (18) |
| Last_Modified_By_Name_Text__c | Text Area(255) |
| Last_Modified_Date__c | DateTime |
| Namespace_Prefix__c | Text (80) |
| Record_Type_Id__c | Text (18) |
| Sobject_Type__c | TextArea |
| System_Modstamp__c | DateTime |

Next, create the following queueable:

    public class DataDictionaryRecordType_Queueable implements Finalizer, Queueable {

        public void execute(QueueableContext context) {
            Map<String, Data_Object_Record_Type__c> dataObjRTMAP = new Map<String, Data_Object_Record_Type__c>();
            List<RecordType> rtList = [
                SELECT Id, Name, DeveloperName,
                       NamespacePrefix, Description, BusinessProcessId,
                       SobjectType, IsActive,
                       CreatedById,
                       CreatedBy.Name, CreatedDate, LastModifiedById,
                       LastModifiedBy.Name, LastModifiedDate, SystemModstamp
                FROM RecordType
            ];

            // Get the Data Object Ids for linking to the parent
            Map<String, Id> dataObjMap = new Map<String, Id>();
            List<Data_Object__c> dataObjList = [SELECT Id, Name FROM Data_Object__c];
            for (Data_Object__c dataObj : dataObjList) {
                dataObjMap.put(dataObj.Name, dataObj.Id);
            }

            // Key the existing records by record type Id
            List<Data_Object_Record_Type__c> dataObjRTList = [
                SELECT Id, Name, Data_Object__c, Developer_Name__c,
                       Record_Type_Id__c, Namespace_Prefix__c, Description__c,
                       Business_Process_Id__c, Sobject_Type__c, Is_Active__c,
                       Created_By_Id__c, Created_By_Name__c,
                       Created_Date__c, Last_Modified_By_Id__c, Last_Modified_By_Name_Text__c,
                       Last_Modified_Date__c, System_Modstamp__c
                FROM Data_Object_Record_Type__c
            ];
            for (Data_Object_Record_Type__c dataObjRT : dataObjRTList) {
                dataObjRTMAP.put(dataObjRT.Record_Type_Id__c, dataObjRT);
            }

            List<Data_Object_Record_Type__c> upsertDORTList = new List<Data_Object_Record_Type__c>();
            for (RecordType rt : rtList) {
                Data_Object_Record_Type__c oldDataObjRT = dataObjRTMAP.get(rt.Id);
                if (oldDataObjRT != null) {
                    // Still exists in the org, so it must not be deleted below
                    dataObjRTMAP.remove(rt.Id);
                }

                Data_Object_Record_Type__c newDataObjRT = new Data_Object_Record_Type__c();
                newDataObjRT.Developer_Name__c = rt.DeveloperName;
                newDataObjRT.Record_Type_Id__c = rt.Id;
                newDataObjRT.Namespace_Prefix__c = rt.NamespacePrefix;
                newDataObjRT.Description__c = rt.Description;
                newDataObjRT.Business_Process_Id__c = rt.BusinessProcessId;
                newDataObjRT.Sobject_Type__c = rt.SobjectType;
                newDataObjRT.Is_Active__c = rt.IsActive;
                newDataObjRT.Created_By_Id__c = rt.CreatedById;
                newDataObjRT.Created_By_Name__c = rt.CreatedBy.Name;
                newDataObjRT.Created_Date__c = rt.CreatedDate;
                newDataObjRT.Last_Modified_By_Id__c = rt.LastModifiedById;
                newDataObjRT.Last_Modified_By_Name_Text__c = rt.LastModifiedBy.Name;
                newDataObjRT.Last_Modified_Date__c = rt.LastModifiedDate;
                newDataObjRT.System_Modstamp__c = rt.SystemModstamp;
                newDataObjRT.Data_Object__c = dataObjMap.get(rt.SobjectType);
                newDataObjRT.Name = rt.Name;

                if (oldDataObjRT == null) {
                    // New record type
                    upsertDORTList.add(newDataObjRT);
                } else if (newDataObjRT.Name != oldDataObjRT.Name ||
                           newDataObjRT.Data_Object__c != oldDataObjRT.Data_Object__c ||
                           newDataObjRT.Developer_Name__c != oldDataObjRT.Developer_Name__c ||
                           newDataObjRT.Record_Type_Id__c != oldDataObjRT.Record_Type_Id__c ||
                           newDataObjRT.Namespace_Prefix__c != oldDataObjRT.Namespace_Prefix__c ||
                           newDataObjRT.Description__c != oldDataObjRT.Description__c ||
                           newDataObjRT.Business_Process_Id__c != oldDataObjRT.Business_Process_Id__c ||
                           newDataObjRT.Sobject_Type__c != oldDataObjRT.Sobject_Type__c ||
                           newDataObjRT.Is_Active__c != oldDataObjRT.Is_Active__c ||
                           newDataObjRT.Created_By_Id__c != oldDataObjRT.Created_By_Id__c ||
                           newDataObjRT.Created_By_Name__c != oldDataObjRT.Created_By_Name__c ||
                           newDataObjRT.Created_Date__c != oldDataObjRT.Created_Date__c ||
                           newDataObjRT.Last_Modified_By_Id__c != oldDataObjRT.Last_Modified_By_Id__c ||
                           newDataObjRT.Last_Modified_By_Name_Text__c != oldDataObjRT.Last_Modified_By_Name_Text__c ||
                           newDataObjRT.Last_Modified_Date__c != oldDataObjRT.Last_Modified_Date__c ||
                           newDataObjRT.System_Modstamp__c != oldDataObjRT.System_Modstamp__c) {
                    // Existing record type with changes: reuse the record Id so the upsert updates it
                    newDataObjRT.Id = oldDataObjRT.Id;
                    upsertDORTList.add(newDataObjRT);
                }
            }

            // Whatever is left in the map no longer exists as a record type in the org
            delete dataObjRTMAP.values();
            upsert upsertDORTList;

            DataDictionaryRecordType_Queueable DDRT = new DataDictionaryRecordType_Queueable();
            System.attachFinalizer(DDRT);
        }

        // Finalizer implementation: start the picklist batch once the queueable has succeeded
        public void execute(FinalizerContext ctx) {
            if (ctx.getResult() == ParentJobResult.SUCCESS) {
                DataDictionaryPicklist_Batch DDPB = new DataDictionaryPicklist_Batch(); // batch for creating all picklist values
                Id batchId = Database.executeBatch(DDPB);
            }
        }
    }

Data Dictionary Tracked Fields Metadata

The tracked fields metadata is the list of fields that the utility automatically checks for changes. Fields that are not included in this list can be edited manually, which lets you track additional attributes that neither the FieldDefinition object nor the field describe covers.

Create a custom metadata type named Data_Dictionary_Tracked_Field with the following Label and DeveloperName pairs (no custom fields are needed):

| Label | DeveloperName |
| --- | --- |
| Is_High_Scale_Number__c | Is_High_Scale_Number |
| Relationship_Order__c | Relationship_Order |
| Name__c | Name_c |
| Is_List_Filterable__c | Is_List_Filterable |
| Is_Indexed__c | Is_Indexed |
| Extra_Type_Info__c | Extra_Type_Info |
| Nillable__c | Nillable |
| Filterable__c | Filterable |
| HTML_Formatted__c | HTML_Formatted |
| Calculated__c | Calculated |
| Formula_Treat_Null_Number_As_Zero__c | Formula_Treat_Null_Number_As_Zero |
| Reference_To__c | Reference_To |
| Groupable__c | Groupable |
| Id | Id |
| Id_Lookup__c | Id_Lookup |
| Calculated_Formula__c | Calculated_Formula |
| Label__c | Label |
| External_Id__c | External_Id |
| Picklist_Values__c | Picklist_Values |
| Is_List_Sortable__c | Is_List_Sortable |
| Restricted_Delete__c | Restricted_Delete |
| Is_Polymorphic_Foreign_Key__c | Is_Polymorphic_Foreign_Key |
| Dependent_Picklist__c | Dependent_Picklist |
| Search_Prefilterable__c | Search_Prefilterable |
| Auto_Number__c | Auto_Number |
| Type__c | Type |
| Is_Compact_Layoutable__c | Is_Compact_Layoutable |
| AI_Prediction_Field__c | AI_Prediction_Field |
| Length__c | Length |
| Last_Modified_Date__c | Last_Modified_Date |
| Is_Api_Sortable__c | Is_Api_Sortable |
| Default_Value__c | Default_Value |
| Business_Status__c | Business_Status |
| Default_Value_Formula__c | Default_Value_Formula |
| Cascade_Delete__c | Cascade_Delete |
| Last_Modified_By_Id__c | Last_Modified_By_Id |
| Business_Owner_Id__c | Business_Owner_Id |
| Name_Field__c | Name_Field |
| Namespace_Prefix__c | Namespace_Prefix |
| Is_Name_Field__c | Is_Name_Field |
| Byte_Length__c | Byte_Length |
| Relationship_Name__c | Relationship_Name |
| Name_Pointing__c | Name_Pointing |
| Name | Name |
| Precision__c | Precision |
| Is_Compound__c | Is_Compound |
| Unique__c | Unique |
| Defaulted_On_Create__c | Defaulted_On_Create |
| Restricted_Picklist__c | Restricted_Picklist |
| Is_Workflow_Filterable__c | Is_Workflow_Filterable |
| Entity_Definition_Id__c | Entity_Definition_Id |
| Description__c | Description |
| Write_Requires_Master_Read__c | Write_Requires_Master_Read |
| Soap_Type__c | Soap_Type |
| Digits__c | Digits |
| Inline_Help_Text__c | Inline_Help_Text |
| Deprecated_and_Hidden__c | Deprecated_and_Hidden |
| Is_List_Visible__c | Is_List_Visible |
| Controlling_Field_Definition_Id__c | Controlling_Field_Definition_Id |
| Scale__c | Scale |
| Permissionable__c | Permissionable |
| Local_Name__c | Local_Name |
| Custom__c | Custom |
| Is_Field_History_Tracked__c | Is_Field_History_Tracked |
| Durable_Id__c | Durable_Id |
| Controller__c | Controller |
| Security_Classification__c | Security_Classification |
| SObject_Field__c | SObject_Field |
| Data_Type__c | Data_Type |
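Each Label in this list is the API name of a field on Data_Field__c, which lets the code compare two records dynamically instead of hard-coding every field. The utility's own dataDictionaryFieldsMatching method is the reference for how the comparison is really done. The following is only a sketch of the idea, with a hypothetical class and method name, and it assumes every tracked field was queried or set on both records.

```apex
// Hypothetical helper for illustration; not the article's implementation.
public class TrackedFieldCompareSketch {
    // Returns true when every tracked field holds the same value on both records.
    public static Boolean trackedFieldsMatch(SObject existing, SObject candidate, List<String> trackedFields) {
        for (String fieldName : trackedFields) {
            // SObject.get reads a field value by its API name
            if (existing.get(fieldName) != candidate.get(fieldName)) {
                return false;
            }
        }
        return true;
    }
}
```

For example, two in-memory Account records that share a Name but differ in Phone match when only Name is tracked, and stop matching once Phone is added to the tracked list.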

The Data Dictionary batch class requires a helper utility class:

Data Dictionary Utility

The utility class performs several functions:

- Creating a new data field

- Getting the picklist values

  - Used with the data record type picklist records

- Identifying the matching data field records

  - Compared to a combination record of the field definition and the field describe

- Identifying the data fields in scope that are picklist type fields

- Creating the Data Field record type picklists

- Lightning Web Component button functions

public class DataDictionaryUtility {

public static Data_Field__c createNewDataField(FieldDefinition fieldDef, schema.describefieldresult describeField){

Data_Field__c newDataField = new Data_Field__c();

if(describeField != null){

newDataField.Name = describeField.getName();

newDataField.Name__c = describeField.getName();

newDataField.Length__c = describeField.getLength();

newDataField.AI_Prediction_Field__c = describeField.isAiPredictionField();

newDataField.Calculated_Formula__c = String.valueOf(describeField.getCalculatedFormula());

newDataField.Default_Value__c = String.valueOf(describeField.getDefaultValue());

newDataField.Deprecated_and_Hidden__c = describeField.isDeprecatedAndHidden();

newDataField.Formula_Treat_Null_Number_As_Zero__c = describeField.isFormulaTreatNullNumberAsZero();

newDataField.Inline_Help_Text__c = String.valueOf(describeField.getInlineHelpText());

newDataField.Permissionable__c = describeField.isPermissionable();

String referenceTo;

if(describefield.getReferenceTo().size()>0){

referenceTo = String.ValueOf(describefield.getReferenceTo());

}

newDataField.Reference_To__c = referenceTo;

newDataField.Restricted_Picklist__c = describeField.isRestrictedPicklist();

newDataField.SObject_Field__c = String.valueOf(describeField.getSobjectField());

newDataField.Auto_Number__c = describeField.isAutoNumber();

newDataField.Cascade_Delete__c = describeField.isCascadeDelete();

newDataField.Default_Value_Formula__c = String.valueOf(describeField.getDefaultValueFormula());

newDataField.Digits__c = describeField.getDigits();

newDataField.Label__c = describeField.getLabel();

newDataField.Name_Field__c = describeField.isNameField();

//getpicklist from method

newDataField.Picklist_Values__c = getPicklistValues(describefield.getPicklistValues());

newDataField.Type__c = String.valueOf(describeField.getType());

newDataField.Byte_Length__c = describeField.getByteLength();

newDataField.Controller__c = String.valueOf(describeField.getController());

newDataField.Defaulted_On_Create__c = describeField.isDefaultedOnCreate();

newDataField.External_Id__c = describeField.isExternalId();

newDataField.Name_Pointing__c = describeField.isNamePointing();

newDataField.Unique__c = describeField.isUnique();

newDataField.Relationship_Order__c = describeField.getRelationshipOrder();

newDataField.Restricted_Delete__c = describeField.isRestrictedDelete();

newDataField.Soap_Type__c = String.valueOf(describeField.getSoapType());

newDataField.Write_Requires_Master_Read__c = describeField.isWriteRequiresMasterRead();

newDataField.Id_Lookup__c = describeField.isIdLookup();

newDataField.Custom__c = describeField.isCustom();

newDataField.Dependent_Picklist__c = describeField.isDependentPicklist();

}

if(fieldDef != null){

if(String.isBlank(newDataField.Name)){

system.debug('newDataField.Name is blank'+newDataField.Name);

newDataField.Name = fieldDef.QualifiedApiName;

}

system.debug(fieldDef.QualifiedApiName);

newDataField.Durable_Id__c = fieldDef.DurableId;

newDataField.Name__c = fieldDef.QualifiedApiName;

newDataField.Entity_Definition_Id__c = fieldDef.EntityDefinitionId;

newDataField.Namespace_Prefix__c = fieldDef.NamespacePrefix;

newDataField.Local_Name__c = fieldDef.Label;

if(newDataField.Length__c == null){

system.debug('newDataField.Length__c is blank'+newDataField.Name);

newDataField.Length__c = fieldDef.Length;

}

newDataField.Data_Type__c = fieldDef.DataType;

newDataField.Extra_Type_Info__c = fieldDef.ExtraTypeInfo;

newDataField.Calculated__c = fieldDef.IsCalculated;

newDataField.Is_High_Scale_Number__c = fieldDef.IsHighScaleNumber;

newDataField.HTML_Formatted__c = fieldDef.IsHtmlFormatted;

newDataField.Is_Name_Field__c = fieldDef.IsNameField;

newDataField.Nillable__c = fieldDef.IsNillable;

newDataField.Is_Workflow_Filterable__c = fieldDef.IsWorkflowFilterable;

newDataField.Is_Compact_Layoutable__c = fieldDef.IsCompactLayoutable;

newDataField.Precision__c = String.ValueOf(fieldDef.Precision);

newDataField.Scale__c = fieldDef.Scale;

newDataField.Is_Field_History_Tracked__c = fieldDef.IsFieldHistoryTracked;

newDataField.Is_Indexed__c = fieldDef.IsIndexed;

newDataField.Filterable__c = fieldDef.IsApiFilterable;

newDataField.Is_Api_Sortable__c = fieldDef.IsApiSortable;

newDataField.Is_List_Filterable__c = fieldDef.IsListFilterable;

newDataField.Is_List_Sortable__c = fieldDef.IsListSortable;

newDataField.Groupable__c = fieldDef.IsApiGroupable;

newDataField.Is_List_Visible__c = fieldDef.IsListVisible;

newDataField.Controlling_Field_Definition_Id__c = fieldDef.ControllingFieldDefinitionId;

newDataField.Last_Modified_Date__c = String.ValueOf(fieldDef.LastModifiedDate);

newDataField.Last_Modified_By_Id__c = fieldDef.LastModifiedById;

newDataField.Relationship_Name__c = fieldDef.RelationshipName;

newDataField.Is_Compound__c = fieldDef.IsCompound;

newDataField.Search_Prefilterable__c = fieldDef.IsSearchPrefilterable;

newDataField.Is_Polymorphic_Foreign_Key__c = fieldDef.IsPolymorphicForeignKey;

newDataField.Business_Owner_Id__c = fieldDef.BusinessOwnerId;

newDataField.Business_Status__c = fieldDef.BusinessStatus;

newDataField.Security_Classification__c = fieldDef.SecurityClassification;

newDataField.Description__c = fieldDef.Description;

}

return newDataField;

}

public static Data_Field__c createNewDataField(FieldDefinition fieldDef, schema.describefieldresult describeField, Id dataFieldId, Id dataObjectId){

Data_Field__c potentialDataField = createNewDataField(fieldDef, describeField);

potentialDataField.Data_Object__c = dataObjectId;

//only set the Id when an existing record is being updated; a null Id leaves this as a new record

if(dataFieldId != null){

potentialDataField.Id = dataFieldId;

}

return potentialDataField;

}

public static String getPicklistValues(List<PicklistEntry> currentPickListEntryList){

String pickListString;

if(currentPickListEntryList != null){

List<String> pickListStringList = New List<String>();

for(Schema.PicklistEntry picklistEntry : currentPickListEntryList){

pickListStringList.add(picklistEntry.getValue());

}

//keep the string null when there are no values so the later comparison does not flag an update

if(!pickListStringList.isEmpty()){

pickListString = String.join(pickListStringList, ', ');

}

//truncate the string so it cannot exceed the length of the long text area field

if(pickListString != null){

pickListString = pickListString.abbreviate(131000);

}

}

return pickListString;

}

//used to check if the data field record fields are matching

public static Boolean dataDictionaryFieldsMatching(Data_Field__c dataField, Data_Field__c potentialNewDataField, List<String> trackedFieldsStringList){

boolean isMatching = true;

if(dataField != null && !trackedFieldsStringList.isEmpty()){

datafield.Data_Object__r = null;

for(String objectField : trackedFieldsStringList){

String dataFieldString = String.valueOf(dataField.get(objectField));

String potentialNewDataFieldString = String.ValueOf(potentialNewDataField.get(objectField));

// .equals would give null pointer exception

if(dataFieldString != potentialNewDataFieldString){

isMatching = false;

break;

}

}

}

return isMatching;

}

public static DataDictionaryObjects.DataFieldScopeWrapper dataFieldsInScope(List<RecordType> dataRecordsInScope){

Set<String> sObjectsInScope = new Set<String>();

Set<Id> recordTypeIds = new Set<Id>();

Map<String, Id> dataFieldsMap = new Map<String,Id>();

for(RecordType dataRecord :dataRecordsInScope){

sObjectsInScope.add(dataRecord.SobjectType);

recordTypeIds.add(dataRecord.Id);

system.debug('dataRecord: '+dataRecord);

}

system.debug('sObjectsInScope: '+sObjectsInScope);

List<Data_Field__c> dataFieldList = [Select Id, Name, Data_Object__r.Name

from Data_Field__c

where Data_Object__r.Name IN :sObjectsInScope

AND Type__c IN ('COMBOBOX', 'MULTIPICKLIST', 'PICKLIST')];

//loop through and put DataField Key and Id in Map

for(Data_Field__c dataField :dataFieldList){

dataFieldsMap.put(dataField.Data_Object__r.Name+'|'+dataField.Name, dataField.Id);

}

system.debug('dataFieldsMap: '+dataFieldsMap);

DataDictionaryObjects.DataFieldScopeWrapper wrapper = new DataDictionaryObjects.DataFieldScopeWrapper();

wrapper.dataFieldsIdMap = dataFieldsMap;

wrapper.ScopeRecordTypeIdSet = recordTypeIds;

return wrapper;

}

public static Data_Field_Record_Type_Picklist__c createDFRTP(DataDictionaryObjects.ValuesClass valueItem, Id DataFieldId, Id DataObjRTId){

Data_Field_Record_Type_Picklist__c newDFRTP = new Data_Field_Record_Type_Picklist__c();

newDFRTP.Name = valueItem.label;

newDFRTP.Attributes__c = String.ValueOf(valueItem.attributes);

if(DataFieldId != null){

newDFRTP.Data_Field__c = DataFieldId;

}else{

system.debug('Null Id: '+DataFieldId);

}

newDFRTP.Data_Object_Record_Type__c = DataObjRTId;

newDFRTP.Picklist_API_Name__c = valueItem.value;

return newDFRTP;

}

//not cacheable: starting a batch is a side effect, and a cached response would hand back a stale job Id

@AuraEnabled

public static Id submitDataObjectBatch(){

DataDictionaryObjects_Batch dObjBatch = new DataDictionaryObjects_Batch();

Id batchId = Database.executeBatch(dObjBatch);

return batchId;

}

@AuraEnabled

public static Id submitDataPicklistBatch(){

DataDictionaryPicklist_Batch dPickBatch = new DataDictionaryPicklist_Batch();

//each record type in scope makes one callout, and a transaction allows at most 100 callouts

Id batchId = Database.executeBatch(dPickBatch, 100);

return batchId;

}

}

At this point you will get several errors when saving the utility class. These are resolved next with the creation of the Data Field Record Type Picklist object.

Data Field Record Type Picklist Object, Batch, and Helper Classes

Create a custom object; Label: Data Field Record Type Picklist, API Name: Data_Field_Record_Type_Picklist__c. This object will link the record types and fields with the picklist value for each.

After creating the object, create the fields. This object won’t have many, as it is mainly a junction object that shows the picklist values for each record type and field. Each entry below gives the field’s DevName followed by its data type:

- Data_Field__c: Master-Detail(Data Field)

- Data_Object_Record_Type__c: Master-Detail(Data Object Record Type)

- Attributes__c: Long Text Area(32768)

- Picklist_API_Name__c: Text Area(255)

- Record_Type__c: Text(18)

Next create the batch class for creating and linking the picklist values to the record type and the data fields:

Data Dictionary Picklist Batch

The batch class loops through all of the record types in the org and uses the ui-api to get the picklist values for each record type. This requires a helper class to make the HTTP callout.
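The heart of the batch's execute method is a reconcile-by-key pass: existing picklist records are indexed by a composite key, each value returned for a record type is either matched (and dropped from the index) or queued for insert, and whatever is left in the index gets deleted. Here is a minimal sketch of that idea in plain JavaScript so it can be run outside an org. The names `buildKey` and `reconcile` and the sample records are made up for illustration and are not part of the Apex code.

```javascript
// Hypothetical helper: mirrors the composite key used in the batch
// (data field Id | record type Id | label | API value).
function buildKey(rec) {
  return [rec.dataFieldId, rec.recordTypeId, rec.label, rec.value].join('|');
}

// existing: records already stored; incoming: values just fetched.
// Returns what would be inserted and what would be deleted.
function reconcile(existing, incoming) {
  const current = new Map(existing.map((rec) => [buildKey(rec), rec]));
  const toInsert = [];
  for (const rec of incoming) {
    const key = buildKey(rec);
    if (current.has(key)) {
      current.delete(key); // still valid, so keep it out of the delete list
    } else {
      toInsert.push(rec); // not stored yet
    }
  }
  return { toInsert, toDelete: [...current.values()] };
}

// Illustrative data only.
const existing = [
  { dataFieldId: 'F1', recordTypeId: 'RT1', label: 'Hot', value: 'Hot' },
  { dataFieldId: 'F1', recordTypeId: 'RT1', label: 'Cold', value: 'Cold' },
];
const incoming = [
  { dataFieldId: 'F1', recordTypeId: 'RT1', label: 'Hot', value: 'Hot' },
  { dataFieldId: 'F1', recordTypeId: 'RT1', label: 'Warm', value: 'Warm' },
];

const result = reconcile(existing, incoming);
console.log(result.toInsert.map((r) => r.value)); // [ 'Warm' ]
console.log(result.toDelete.map((r) => r.value)); // [ 'Cold' ]
```

Removing matched keys from the index as they are found is what lets the same map double as the delete list at the end, so no second comparison pass is needed.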

global class DataDictionaryPicklist_Batch implements Database.Batchable<sObject>, Database.AllowsCallouts {

global Iterable<sObject> start(Database.BatchableContext bc){

List<RecordType> RTList = [SELECT Id, Name, DeveloperName, NamespacePrefix,

Description, BusinessProcessId, SobjectType,

IsActive,

CreatedById, CreatedDate,

LastModifiedById, LastModifiedDate, SystemModstamp

FROM RecordType];

return RTList;

}

global void execute(Database.BatchableContext bc, List<RecordType> scope){

//map for Getting DataObjectRecordType Id

Map<Id,Id> dataObjRTMap = new Map<Id,Id>();

for(Data_Object_Record_Type__c dataObjRT :[Select Id, Record_Type_Id__c from Data_Object_Record_Type__c]){

dataObjRTMap.put(dataObjRT.Record_Type_Id__c, dataObjRT.Id);

}

//map of all data fields in scope, used to look up the Data Field Id when creating a picklist value record

DataDictionaryObjects.DataFieldScopeWrapper scopeWrapper = DataDictionaryUtility.dataFieldsInScope(scope);

Map<String, Id> dataFieldsIdMap = scopeWrapper.dataFieldsIdMap;

//Ids used for DataFieldDelete SOQL

Set<Id> scopeRecordTypeIdSet = scopeWrapper.ScopeRecordTypeIdSet;

//current Picklist Fields

List<Data_Field_Record_Type_Picklist__c> currentRTPList = new List<Data_Field_Record_Type_Picklist__c>([Select Id, Name, Picklist_API_Name__c,

Data_Field__r.Name, Data_Field__r.Data_Object__r.Name,

Data_Object_Record_Type__c from Data_Field_Record_Type_Picklist__c

where Data_Field__c IN :dataFieldsIdMap.values()

AND Data_Object_Record_Type__r.Record_Type_Id__c IN :scopeRecordTypeIdSet]);

//map with the current Picklist values based on a key with the CurrentRTPList - when checked for a current VCItem will be removed and used as a delete list

Map<String, Data_Field_Record_Type_Picklist__c> currentDFRTPMap = new Map<String, Data_Field_Record_Type_Picklist__c>();

//If not in the currentDFRTPMap will be added to the insert List

List<Data_Field_Record_Type_Picklist__c> insertDFRTP = new List<Data_Field_Record_Type_Picklist__c>();

for(Data_Field_Record_Type_Picklist__c currentDFRTP : currentRTPList){

//used to Identify if the value is a current value

String currentKey = currentDFRTP.Data_Field__r.Id+'|'+currentDFRTP.Data_Object_Record_Type__c+'|'+currentDFRTP.Name+'|'+currentDFRTP.Picklist_API_Name__c;

currentDFRTPMap.put(currentKey, currentDFRTP);

}

for(RecordType RT :scope){

Map<String, Object> untype = HttpClass.uiAPIResponse(RT.SobjectType, RT.Id, UserInfo.getSessionId());

//Used for getting the data field Id from the map

String dataObjectName = RT.SobjectType;

//get the picklist values

try{

Map<String,Object> pickFieldUntype = (Map<String,Object>)untype.get('picklistFieldValues');

//loop through the key set so both the field name (key) and its values are available

for(String loopValueKeySet :pickFieldUntype.keySet()){

Object loopValue = pickFieldUntype.get(loopValueKeySet);

//used for a key with DataObject name to get the field Id

String dataFieldName = loopValueKeySet;

String dataFieldKey = dataObjectName+'|'+loopValueKeySet;

Map<String,Object> loopValMap = (Map<String,Object>)loopValue;

String serial = JSON.serialize(loopValMap.get('values'));

List<DataDictionaryObjects.ValuesClass> vclist = (List<DataDictionaryObjects.ValuesClass>)JSON.deserialize(serial, List<DataDictionaryObjects.ValuesClass>.class);

system.debug(vclist);

for(DataDictionaryObjects.ValuesClass vcItem :vcList){

Data_Field_Record_Type_Picklist__c vcDFRTP = DataDictionaryUtility.createDFRTP(vcItem, dataFieldsIdMap.get(dataFieldKey), dataObjRTMap.get(RT.Id));

String key = vcDFRTP.Data_Field__c +'|'+vcDFRTP.Data_Object_Record_Type__c+'|'+vcDFRTP.Name+'|'+vcDFRTP.Picklist_API_Name__c;

If(currentDFRTPMap.get(key) == null){

insertDFRTP.add(vcDFRTP);

}else{

currentDFRTPMap.remove(key);

}

}

}

}catch(Exception e){

system.debug('Exception Error Message: '+e.getMessage());

continue;

}

}

insert insertDFRTP;

delete currentDFRTPMap.values();

}

global void finish(Database.BatchableContext bc){

}

}

Http Helper

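The HttpClass helper builds a REST callout to the same org and retrieves the picklist values for each record type. As with any Apex callout, the endpoint domain (here the org’s own domain URL) typically has to be allowed under Remote Site Settings or reached through a Named Credential.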
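For orientation, the sketch below shows the endpoint the helper calls and how the parts of the response that the batch reads (`picklistFieldValues`, then each field's `values`) can be flattened into rows. It is plain JavaScript with a hand-written sample payload. The names `buildEndpoint` and `flattenPicklistValues`, the domain, the record type Id and the sample values are all illustrative assumptions, and a real response carries more properties than shown here.

```javascript
// Builds the same path the Apex helper concatenates (API version v51.0, as in the article).
function buildEndpoint(orgDomainUrl, objectApiName, recordTypeId) {
  return `${orgDomainUrl}/services/data/v51.0/ui-api/object-info/${objectApiName}/picklist-values/${recordTypeId}`;
}

// Turns { picklistFieldValues: { Field: { values: [...] } } } into flat rows.
function flattenPicklistValues(body) {
  const flat = [];
  const fields = (body && body.picklistFieldValues) || {};
  for (const [fieldName, fieldInfo] of Object.entries(fields)) {
    for (const entry of fieldInfo.values || []) {
      flat.push({ field: fieldName, label: entry.label, value: entry.value });
    }
  }
  return flat;
}

// Hand-written sample, trimmed to the properties the batch reads.
const sampleBody = {
  picklistFieldValues: {
    Rating: {
      values: [
        { attributes: null, label: 'Hot', validFor: [], value: 'Hot' },
        { attributes: null, label: 'Cold', validFor: [], value: 'Cold' },
      ],
    },
  },
};

console.log(buildEndpoint('https://example.my.salesforce.com', 'Account', '012000000000000AAA'));
console.log(flattenPicklistValues(sampleBody));
```

A non-200 response has no `picklistFieldValues`, which is why the sketch falls back to an empty object instead of assuming the property exists.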

public class HttpClass {

//'Enforce login IP ranges on every request' must be turned off in Session Settings for this callout to work

public static Map<String,Object> uiAPIResponse(String recordTypesObject, Id recordTypeId, String token){

//use the ui-api to get the picklist values for each record type

String ENDPOINT_UIAPI = Url.getOrgDomainUrl().toExternalForm()+ '/services/data/v51.0/ui-api/object-info/'+recordTypesObject+'/picklist-values/'+recordTypeId;

String METHOD_UIAPI = 'GET';

Map<String, Object> untype = new Map<String,Object>();

//create headers

List<DataDictionaryObjects.HeaderObject> headerListUIAPI = new List<DataDictionaryObjects.HeaderObject>();

DataDictionaryObjects.HeaderObject headerObjAuthUIAPI = new DataDictionaryObjects.HeaderObject();

headerObjAuthUIAPI.key = 'Authorization';

headerObjAuthUIAPI.value = 'Bearer '+token;

headerListUIAPI.add(headerObjAuthUIAPI);

DataDictionaryObjects.HeaderObject headerObjContent = new DataDictionaryObjects.HeaderObject();

headerObjContent.key = 'Content-Type';

headerObjContent.value = 'application/json';

headerListUIAPI.add(headerObjContent);

HttpResponse UIAPI_RESPONSE = callEndpoint(ENDPOINT_UIAPI, METHOD_UIAPI, headerListUIAPI);

system.debug(UIAPI_RESPONSE.getBody());

if(UIAPI_RESPONSE.getStatusCode() == 200){

untype = (Map<String, Object>)JSON.deserializeUntyped(UIAPI_RESPONSE.getBody());

}else{

untype = null;

}

return untype;

}

public static HttpResponse callEndpoint(String endPoint, String method, List<DataDictionaryObjects.HeaderObject> headerObj) {

//headerObj is used directly below, so no separate copy of the list is needed

Http http = new Http();

HttpRequest request = new HttpRequest();

request.setEndpoint(endPoint);

request.setMethod(method.toUpperCase());

for(DataDictionaryObjects.HeaderObject headerItem : headerObj){

request.setHeader(headerItem.key, headerItem.value);

}

HttpResponse response = http.send(request);

return response;

}

}

Along with the utility and HTTP helper classes, we need to create a few small Apex classes that are used throughout the batches.

Custom Data Dictionary Objects Class

These custom classes help pass data between the batches and the helper classes.

public class DataDictionaryObjects {

public class ValuesClass{

public Attributes attributes;

public String label;

public List<string> validFor;

public String value;

}

//this empty class keeps the attributes field from being strongly typed; typing attributes as List, Object, sObject or String all produced errors

public class Attributes{}

public class DataFieldScopeWrapper{

public Map<string, id> dataFieldsIdMap;

public Set<id> ScopeRecordTypeIdSet;

}

public class HeaderObject {

public String key;

public String value;

}

}

With the batch complete, it is time to create the batch scheduler and set it to run every day.

Data Scheduler

global class DataDictionarySchedule_Batch implements Schedulable{

global void execute(SchedulableContext sc)

{

DataDictionaryObjects_Batch DDOFB = new DataDictionaryObjects_Batch();

Id batchId = Database.executeBatch(DDOFB);

}

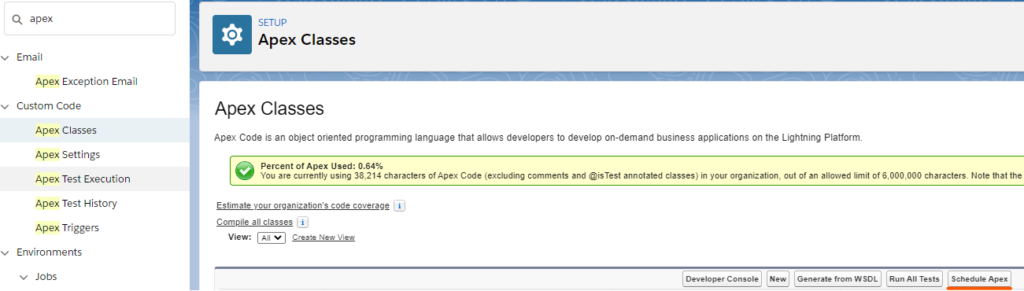

}This scheduler will be used to make sure that any changes throughout the day to the org’s metadata is captured. In order to do this in Setup go to ‘Apex Classes’ –> Schedule Apex –> Select the DataDictionarySchedule_Batch class and input relevant fields.

We now have a living Data Dictionary. It will update every day at the specified time with any changes, additions, or deletions. A scheduler alone, however, doesn’t give the quickest response time if you would like to output a report right away or if this is used in your CI/CD pipeline. One of the big benefits of creating this within Salesforce is being able to build reports and set permissions so that only certain people can make changes in the org while everyone can view.

Ad Hoc Utility Bar Lightning Web Component

Creating an LWC for the data dictionary and adding it to an app’s utility bar makes it quick to update the dictionary with any changes that have just occurred.
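One detail worth knowing about the component's JavaScript: when an imperative Apex call fails, the value passed to `.catch` is an object, so interpolating it directly into a toast message produces `[object Object]`. A small helper can pull out something readable instead. This is only a sketch, assuming that Apex errors expose `body.message` (or an array of such bodies) and that plain JavaScript errors expose `message`. The name `readableError` is hypothetical and is not used in the component.

```javascript
// Hypothetical helper: extract a human-readable message from an error
// caught in an LWC promise chain.
function readableError(error) {
  if (error && error.body) {
    // Some errors carry an array of bodies, each with its own message.
    if (Array.isArray(error.body)) {
      return error.body.map((e) => e.message).join(', ');
    }
    if (typeof error.body.message === 'string') {
      return error.body.message;
    }
  }
  // Plain JavaScript errors.
  if (error && typeof error.message === 'string') {
    return error.message;
  }
  return 'Unknown error';
}

console.log(String({ body: { message: 'Batch limit reached' } })); // [object Object]
console.log(readableError({ body: { message: 'Batch limit reached' } })); // Batch limit reached
```

In a toast this could be used as `An error occurred: ${readableError(error)}`, which keeps the fallback text when the error has an unexpected shape.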

HTML

<template>

<lightning-button variant="brand-outline" label="Fire Data Object Batch Job" title="Fire Data Object Batch Job" onclick={handleDataObjectClick} class="slds-p-around_medium slds-align_absolute-center"></lightning-button>

<lightning-button variant="brand-outline" label="Fire Data Picklist Batch Job" title="Fire Data Picklist Batch Job" onclick={handleDataPicklistClick} class="slds-p-around_medium slds-align_absolute-center"></lightning-button>

</template>

JavaScript

import { LightningElement } from 'lwc';

import submitDataObjectBatch from '@salesforce/apex/DataDictionaryUtility.submitDataObjectBatch';

import submitDataPicklistBatch from '@salesforce/apex/DataDictionaryUtility.submitDataPicklistBatch';

import { ShowToastEvent } from 'lightning/platformShowToastEvent'

export default class DataDictionaryUtility extends LightningElement {

//the batch Id returned by Apex is compared with the previous one to detect whether a new job actually started

toast = {};

newObjectId; //used to track if a new batch id is created

oldObjectId; //used to track old batch id

newPicklistId; //used to track if a new batch id is created

oldPicklistId; //used to track old batch id

handleDataObjectClick() {

submitDataObjectBatch().then(result => {

this.newObjectId = result;

if(this.newObjectId != this.oldObjectId){

this.oldObjectId = result;

this.toast.message = 'Data Object Batch has started';

this.toast.variant = 'success';

this.showToast(this.toast);

}else{

this.toast.message = 'Data Object Batch has not started; try again in a little while or refresh the page';

this.toast.variant = 'warning';

this.showToast(this.toast);

}

}).catch( error => {

this.toast.message = `An error occurred: ${error}`;

this.toast.variant = 'error';

this.showToast(this.toast);

});

}

handleDataPicklistClick() {

submitDataPicklistBatch().then(result => {

this.newPicklistId = result;

if(this.newPicklistId != this.oldPicklistId){

this.oldPicklistId = result;

this.toast.message = 'Data Picklist Batch has started';

this.toast.variant = 'success';

this.showToast(this.toast);

}else{

this.toast.message = 'Data Picklist Batch has not started; try again in a little while or refresh the page';

this.toast.variant = 'warning';

this.showToast(this.toast);

}

}).catch( error => {

this.toast.message = `An error occurred: ${error}`;

this.toast.variant = 'error';

this.showToast(this.toast);

});

}

showToast(obj){

const event = new ShowToastEvent({

message: obj.message,

variant: obj.variant

});

this.dispatchEvent(event);

}

}

Meta

<?xml version="1.0" encoding="UTF-8"?>

<LightningComponentBundle xmlns="http://soap.sforce.com/2006/04/metadata">

<apiVersion>51.0</apiVersion>

<isExposed>true</isExposed>

<masterLabel>Data Dictionary Utility</masterLabel>

<targets>

<target>lightning__UtilityBar</target>

</targets>

</LightningComponentBundle>

Since the data records reside in Salesforce, you can create countless reports, such as who is creating the records, which records have been added or updated within the last week, and which ones are missing manually tracked attributes. You can also set up alerts to send to others across the company.

In addition to reporting, this can be implemented in a sandbox, which makes it possible to fit it into your CI/CD plan (especially for large enterprises). Instead of developers creating fields in their own sandboxes and promoting them up, with the potential for a misspelled or incorrect field, everything can first be created in the designated sandbox. Once the individuals responsible have created the field and, if permission sets are in use, assigned it to the right permission sets, the developer can pull it from the designated org. An added bonus of having it in a sandbox is that you can create different permission sets to control who can create fields and who can only read.

Link to GitHub repo: this is a work in progress, along with the article.


17 comments on “Creating a Living Data Dictionary in Salesforce”

People who do not have technical skills can use tools ready for this, such as Atlan, AbstraLinx, Schema Lister, and Metazoa…

I am not familiar with the ones you have listed; it is great to see other options. Thank you! This was more something that didn’t require an external system and could also keep track of changes.

Thank you ever thus for you article post.Really thank you! save writing.

This is really great! Thank you for documenting and making it available!

I have a question regarding the formula field Object_Manager__c on the Data_Field__c object. What should the value of the formula be?

Thank you! It is:

IF( Right(Data_Object__r.Name, 1) == 'e',

HYPERLINK("/lightning/setup/EventObjects/page?address=/"&Right(Durable_Id__c,Len(Durable_Id__c)-Find(".", Durable_Id__c))&"?setupid=EventObjects", Name__c&" Object Manager Link" , "_self"),

HYPERLINK("/lightning/setup/ObjectManager/"&Left(Durable_Id__c,Find(".", Durable_Id__c)-1)&"/FieldsAndRelationships/"&Right(Durable_Id__c,Len(Durable_Id__c)-Find(".", Durable_Id__c))&"/view", Name__c&" Object Manager Link" , "_self"))

I’m getting an error with the first class

Error: Compile Error: Invalid type: DataDictionaryFields_Batch at line 105 column 9

Any ideas?

The DataDictionaryFields_Batch class needs to be created in the org.

Hello! Can you highlight how is this solution different from the App Exchange – Config Workbook?

From the looks of it the Config Workbook seems to be more about permissions, there is a component about the fields but I am not sure how much detail it provides. It is a managed package so you would be able to do any customizations and there is a cost associated.

This is awesome! Great job!

Thank you, I appreciate it!

An impressive share! I have just forwarded this onto a friend who had been conducting a little homework on this. And he in fact ordered me dinner because I stumbled upon it for him… lol. So let me reword this…. Thank YOU for the meal!! But yeah, thanks for spending the time to talk about this subject here on your web page.

Thank you! I appreciate the feedback and letting me know it has been helpful!

Copy and paste the code and then you get nothing but errors…..

Thank you, there was a section that was missing. I have updated it, which should clear that up. Aside from that, the classes are interdependent on one another. If you use the Dev Console to save all of them, you will need to comment out the references to the other classes, such as the Database.executeBatch() calls, and then uncomment them after all are saved. I added the repo, which can be deployed directly into the org.

This is awesome! but, I cant get past the first class. There may be cut/paste issues on my side. Is this available in github?

Unexpected token ‘currentObjectNamesList’

I created and added a link to a GitHub repo (bottom of the post). The repo may have a few more things in it as I am adding to it, and I will continue updating the blog to help explain what I add; however, I can’t guarantee timeliness. There was an error with the post: the list data types were not coming through correctly, e.g. List<String> was coming up as List. This should be resolved now when copying from the article.